In a world where what you see — and increasingly, what you hear — can be artificially generated, the line between real and fake is blurring fast. Deepfake technology, once a niche experiment among AI researchers, is now a widespread tool capable of mimicking human faces, voices, and emotions with frightening accuracy. As synthetic content becomes more convincing, the value of authenticity — especially in voice and communication — has never been more critical.

In this article, we’ll explore the rise of deepfakes, how they impact everyday users, and why staying connected to real, human expression is one of the few defenses we have left. Welcome to the Deepfake Reality, and to why authentic voice matters more than ever.

What Is a Deepfake?

“Deepfake” is a term that combines “deep learning” and “fake.” It refers to synthetic media (videos, images, or audio) that has been digitally manipulated using artificial intelligence to appear real. These fabrications often involve swapping faces, cloning voices, or mimicking the movements and expressions of real people with uncanny precision.

While the technology was initially developed for entertainment and experimental AI research, this form of manipulated content has quickly evolved into a tool used in misinformation campaigns, fraud, and identity theft. What makes it so dangerous is its realism — the average person may not be able to tell the difference between a genuine video and a convincingly altered one.

As AI models become more advanced and accessible, creating these fake visuals and audio clips no longer requires technical expertise or expensive tools. In fact, some smartphone apps now let anyone produce synthetic content in seconds. This growing accessibility raises serious concerns about truth, trust, and how we consume digital information.

The Evolution of Deepfake Technology

The origins of deepfake technology can be traced back to advancements in artificial intelligence — particularly in deep learning and neural networks. Around 2014, researchers began using generative adversarial networks (GANs) to create realistic images by having two AI models compete: one to generate fakes, and the other to detect them. This back-and-forth battle led to rapid improvements in the quality of synthetic media.
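To make the "two models compete" idea concrete, here is a deliberately tiny sketch of an adversarial training loop. It is not a deepfake model: the "real data" is just numbers drawn around 4.0, the generator is a single linear function, and the discriminator is a single logistic unit. All names (`train_toy_gan`, the learning rate, the step count) are assumptions chosen for illustration. Real GANs use deep networks on images or audio, and loops like this one are known to oscillate rather than settle neatly.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 500, lr: float = 0.02, seed: int = 0):
    """Alternate discriminator and generator updates on 1-D toy data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * z + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        real = rng.gauss(4.0, 1.0)  # one sample of "real" data (toy assumption)
        z = rng.gauss(0.0, 1.0)     # random noise fed to the generator
        fake = a * z + b

        # Discriminator step: minimise -log D(real) - log(1 - D(fake)),
        # i.e. learn to score real samples high and fakes low.
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        grad_w = -(1.0 - d_real) * real + d_fake * fake
        grad_c = -(1.0 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: minimise -log D(fake),
        # i.e. adjust the fake so the discriminator scores it as real.
        d_fake = sigmoid(w * fake + c)
        grad_a = -(1.0 - d_fake) * w * z
        grad_b = -(1.0 - d_fake) * w
        a -= lr * grad_a
        b -= lr * grad_b

    return (a, b), (w, c)


if __name__ == "__main__":
    generator, discriminator = train_toy_gan()
    print("generator (a, b):", generator)
    print("discriminator (w, c):", discriminator)
```

Each pass through the loop is one round of the contest: the discriminator first gets better at telling the real sample from the fake, then the generator shifts its output in whatever direction currently fools the discriminator.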

The term “deepfake” itself was coined around 2017, when online communities began sharing face-swapped videos for entertainment — mostly harmless at first. But as the technology matured and became more accessible, its applications quickly expanded beyond fun and creativity.

By the late 2010s, deepfake content had started appearing in political videos, celebrity scandals, fake interviews, and even non-consensual explicit content (so-called revenge content). This sparked ethical debates and global concern over how such media could be weaponized.

Today, AI-generated media is no longer limited to tech labs or experts. Open-source tools, mobile apps, and even social media filters now allow millions of people to experiment with deepfake-like effects — often without realizing the risks involved.

The Real Impact of Deepfakes on Everyday Users

One of the most alarming aspects of AI-generated content is how effectively it can erode trust. When users can no longer distinguish between real and fake — especially in videos and audio — the very foundation of digital communication becomes fragile. We begin to question not just what we see, but what we hear.

This technology has already been used in misinformation campaigns, political manipulation, and corporate scams. There have been cases where synthetic voices were used to impersonate CEOs in phone calls, tricking employees into transferring large sums of money — with some attacks costing companies millions.

For everyday users, the threat is deeply personal. Identity theft, harassment, and reputational damage are all on the rise due to manipulated media being used maliciously. Imagine discovering a fake video of yourself saying things you never said — or worse, being unable to prove it’s fabricated. These scenarios are no longer science fiction.

Emotionally, the impact can be devastating. Victims of such attacks often report feelings of violation, fear, and helplessness — particularly when the content is explicit or targets their social credibility. In such a reality, trust becomes a rare commodity, and authenticity more valuable than ever.

The Expanding Use Cases of Deepfakes

As deepfake technology becomes more accessible and sophisticated, its applications are spreading across a wide range of industries — some innovative, others deeply concerning.

Entertainment & Film

In Hollywood and digital content creation, deepfakes have been used to de-age actors, resurrect historical figures, and even create entirely digital performances. While this can push creative boundaries, it also raises questions about consent and artistic integrity.

Politics & Propaganda

One of the most dangerous uses of deepfakes is in political manipulation. Fake speeches, doctored interviews, and synthetic news footage have been created to sway public opinion or discredit individuals — often going viral before they can be debunked.

Cybercrime & Fraud

Scammers are now using AI-generated voices and videos to impersonate trusted figures — such as CEOs, government officials, or even friends — to steal money or sensitive data. These attacks are highly convincing and difficult to detect without forensic tools.

Social Media & Viral Content

Deepfake filters and apps are becoming mainstream on platforms like TikTok, Instagram, and Snapchat. While often used for fun, they blur the lines between entertainment and manipulation — especially when fake content is presented without context.

Education & Research

In more positive contexts, deepfakes are used for educational simulations, language learning, and historical reconstructions. For example, students can watch a lifelike video of Einstein explaining the theory of relativity — something that was impossible just a few years ago.

The Global Response to Deepfakes

🔹 AI-Powered Deepfake Detection

Machine learning models are now trained to detect irregularities in videos and audio, such as unnatural blinking, pixel distortion, or timing mismatches in speech. Companies such as Microsoft and Google have released detection tools and research datasets to support journalists and content platforms.
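As a rough illustration of one such signal, the sketch below checks whether the blink rate in a clip looks plausible. It assumes a hypothetical upstream step has already produced one "eye openness" score per frame (1.0 for fully open, 0.0 for closed). The function names and the thresholds are illustrative assumptions, not values taken from any real detector. Production systems rely on learned models that combine many cues, and a heuristic like this is easy to fool on its own.

```python
from typing import Sequence


def count_blinks(eye_openness: Sequence[float], closed_below: float = 0.2) -> int:
    """Count open-to-closed transitions in a per-frame eye-openness series."""
    blinks = 0
    eyes_closed = False
    for value in eye_openness:
        if value < closed_below and not eyes_closed:
            blinks += 1          # a new blink starts on this frame
            eyes_closed = True
        elif value >= closed_below:
            eyes_closed = False  # eyes have reopened
    return blinks


def blinks_per_minute(eye_openness: Sequence[float], fps: float,
                      closed_below: float = 0.2) -> float:
    """Convert the blink count into a per-minute rate for the clip."""
    if not eye_openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    duration_minutes = len(eye_openness) / fps / 60.0
    return count_blinks(eye_openness, closed_below) / duration_minutes


def blink_rate_is_suspicious(eye_openness: Sequence[float], fps: float,
                             min_rate: float = 5.0, max_rate: float = 40.0) -> bool:
    """Flag clips whose blink rate falls outside an assumed plausible range."""
    rate = blinks_per_minute(eye_openness, fps)
    return rate < min_rate or rate > max_rate


if __name__ == "__main__":
    # One sample per second for a minute: 15 blinks versus none at all.
    natural = [1.0, 1.0, 1.0, 0.1] * 15
    unblinking = [1.0] * 60
    print(blink_rate_is_suspicious(natural, fps=1.0))
    print(blink_rate_is_suspicious(unblinking, fps=1.0))
```

A flag from a check like this is only a reason to look closer, not proof of manipulation.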

🔹 Legislation & Regulation

Some countries have begun drafting or enacting laws to criminalize malicious deepfake use, especially in political or sexual contexts. For example, in 2019 California passed a law (AB 730) restricting the distribution of deceptive deepfakes of political candidates within 60 days of an election.

🔹 Watermarking & Content Verification

Organizations like the Coalition for Content Provenance and Authenticity (C2PA) are working on technical standards for signed provenance metadata, which can be combined with watermarking, to trace the origin and modification history of media files. This helps show whether, and how, a video has been altered since it was captured.
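The general idea of a traceable modification history can be sketched as a chain of signed records, each one tied to a hash of the media at that point and to the record before it. This is a simplified illustration and not the C2PA format: the record fields, the function names, and the use of a shared-secret HMAC are all assumptions made to keep the example small. Real provenance systems sign with certificates rather than a shared key.

```python
import hashlib
import hmac
import json
from typing import Dict, List, Optional


def _sign(payload: Dict, key: bytes) -> str:
    """Sign a record payload (HMAC is a stand-in for certificate signatures)."""
    message = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def make_record(media: bytes, action: str, previous: Optional[Dict],
                key: bytes) -> Dict:
    """Create a history entry bound to the media bytes and the prior entry."""
    payload = {
        "action": action,
        "content_sha256": hashlib.sha256(media).hexdigest(),
        "previous_signature": previous["signature"] if previous else None,
    }
    return {**payload, "signature": _sign(payload, key)}


def verify_history(media: bytes, records: List[Dict], key: bytes) -> bool:
    """Check every signature, every link, and that the last hash matches."""
    if not records:
        return False
    previous_signature = None
    for record in records:
        payload = {k: v for k, v in record.items() if k != "signature"}
        if payload.get("previous_signature") != previous_signature:
            return False  # a record was removed, reordered, or inserted
        if not hmac.compare_digest(record.get("signature", ""), _sign(payload, key)):
            return False  # a record was edited after signing
        previous_signature = record["signature"]
    current_hash = hashlib.sha256(media).hexdigest()
    return records[-1]["content_sha256"] == current_hash


if __name__ == "__main__":
    key = b"demo-key-for-illustration-only"  # hypothetical signing key
    original = b"raw video bytes"
    cropped = b"raw video bytes, cropped"

    captured = make_record(original, "captured", None, key)
    edited = make_record(cropped, "cropped", captured, key)

    print(verify_history(cropped, [captured, edited], key))
    print(verify_history(b"undeclared edit", [captured, edited], key))
```

The second check fails because the file no longer matches the last recorded hash, which is the kind of mismatch a provenance viewer would surface to the user.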

🔹 Media Literacy & Public Awareness

Arguably the most important tool is education. Helping users recognize manipulated content, question sources, and slow down before sharing unverified videos is a critical line of defense. As deepfakes evolve, so must our digital instincts.

Why Authentic Voice Still Holds Power

In a world where faces can be swapped and voices cloned in seconds, authenticity is no longer guaranteed — it’s something we must consciously protect. And while manipulated visuals have existed for decades, AI-generated voice deepfakes now pose an even more personal threat.

Your voice is a signature — rich with emotion, intention, and identity. But modern algorithms can now replicate tone, inflection, and even breathing patterns to create a nearly perfect copy of anyone’s voice. This makes audio-based manipulation not only possible but alarmingly accessible.

That’s why staying connected to our real voices has never been more important. It’s not just about speaking — it’s about owning what we say and how we say it. Tools that allow you to capture, organize, and revisit your own authentic voice can serve as a layer of digital self-defense.

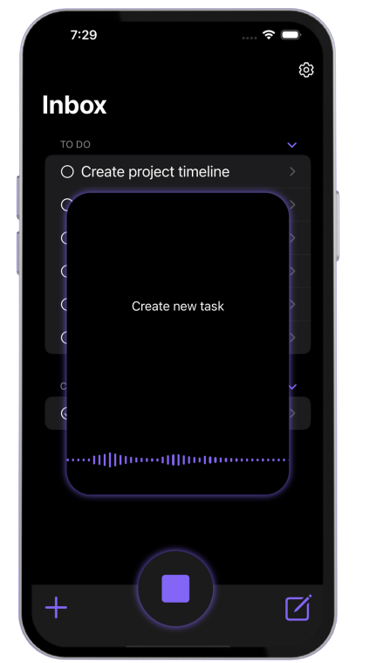

Apps like Vozly — a voice-first to-do list and idea tracker — are built on this very principle. Instead of saving raw voice recordings, Vozly uses speech-to-text technology to transform your spoken thoughts into structured tasks. This approach blends the natural spontaneity of voice with the clarity and organization of written productivity tools.

In a world where synthetic voices and fake audio clips are becoming harder to detect, tools that allow you to capture your authentic thinking process — even in text form — offer a subtle but powerful way to stay grounded in what’s real.